Google AI Capable of Translating Unseen Language Pairs

Google is in the news yet again, this time because of its AI. Google’s AI is not only better at comprehending difficult languages like Mandarin, but it can now also translate between pairs of languages it was never explicitly trained on. I think it is great news, as I use Translate for subtitles, songs, articles and pretty much everything else I need translated. In a research paper, Google disclosed that the system uses its very own ‘interlingua’, an internal representation of phrases that does not depend on the language. The result is ‘zero-shot’ translation: the AI can translate between a pair of languages with sufficient accuracy even though it has never seen that pair. This holds as long as the AI has been trained to translate both languages to or from some other common language.

It was only recently that Google switched Translate to the deep learning GNMT, or Google Neural Machine Translation, system. Google describes it as an end-to-end learning framework that learns from millions of examples, and adds that it has drastically improved the quality of translation. The problem is that Google Translate works with 103 different languages, which means it has to deal with 5,253 language pairs. Multiply that figure by the millions of examples required to train each pair, and the training becomes enormously compute intensive.
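The pair count is easy to check. Here is a minimal sketch in Python, assuming the 5,253 figure counts each pair once regardless of direction. The function names are my own, for illustration only:

```python
from math import comb


def count_language_pairs(num_languages: int) -> int:
    """Number of unordered language pairs (each pair counted once)."""
    return comb(num_languages, 2)


def count_translation_directions(num_languages: int) -> int:
    """Number of ordered (source, target) translation directions."""
    return num_languages * (num_languages - 1)


pairs = count_language_pairs(103)
directions = count_translation_directions(103)
print(pairs)       # 5253
print(directions)  # 10506
```

If every direction needed its own model, the number would double, which only makes the training cost argument stronger.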

After training the system on several language pairs, such as English to Japanese and Korean to English, the researchers wondered whether it could translate a language pair it had never learned. In simpler words, is the system capable of zero-shot translation from Japanese to Korean or vice versa? Amazingly, the answer is yes. According to Google, the AI generates satisfactory translations from Korean to Japanese, even though it was never taught to do so.
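To make the idea of an "unseen" pair concrete, here is a small Python sketch. It takes a set of trained translation directions and lists the directions between the same languages that were never trained. The training set below is my assumption for illustration (both directions between English and each of Japanese and Korean), and `zero_shot_directions` is a hypothetical helper, not part of any Google tool:

```python
from itertools import permutations


def zero_shot_directions(trained):
    """Return (source, target) directions between seen languages
    that do not appear in the trained set."""
    trained = set(trained)
    # Every language that appears on either side of a trained direction.
    languages = {lang for pair in trained for lang in pair}
    # All ordered directions among those languages, minus the trained ones.
    return sorted(set(permutations(languages, 2)) - trained)


# Assumed training directions, for illustration only.
trained_directions = [
    ("English", "Japanese"), ("Japanese", "English"),
    ("English", "Korean"), ("Korean", "English"),
]

print(zero_shot_directions(trained_directions))
# [('Japanese', 'Korean'), ('Korean', 'Japanese')]
```

English plays the role of the common language here: both Japanese and Korean have been paired with it, so the two remaining directions are the zero-shot candidates.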

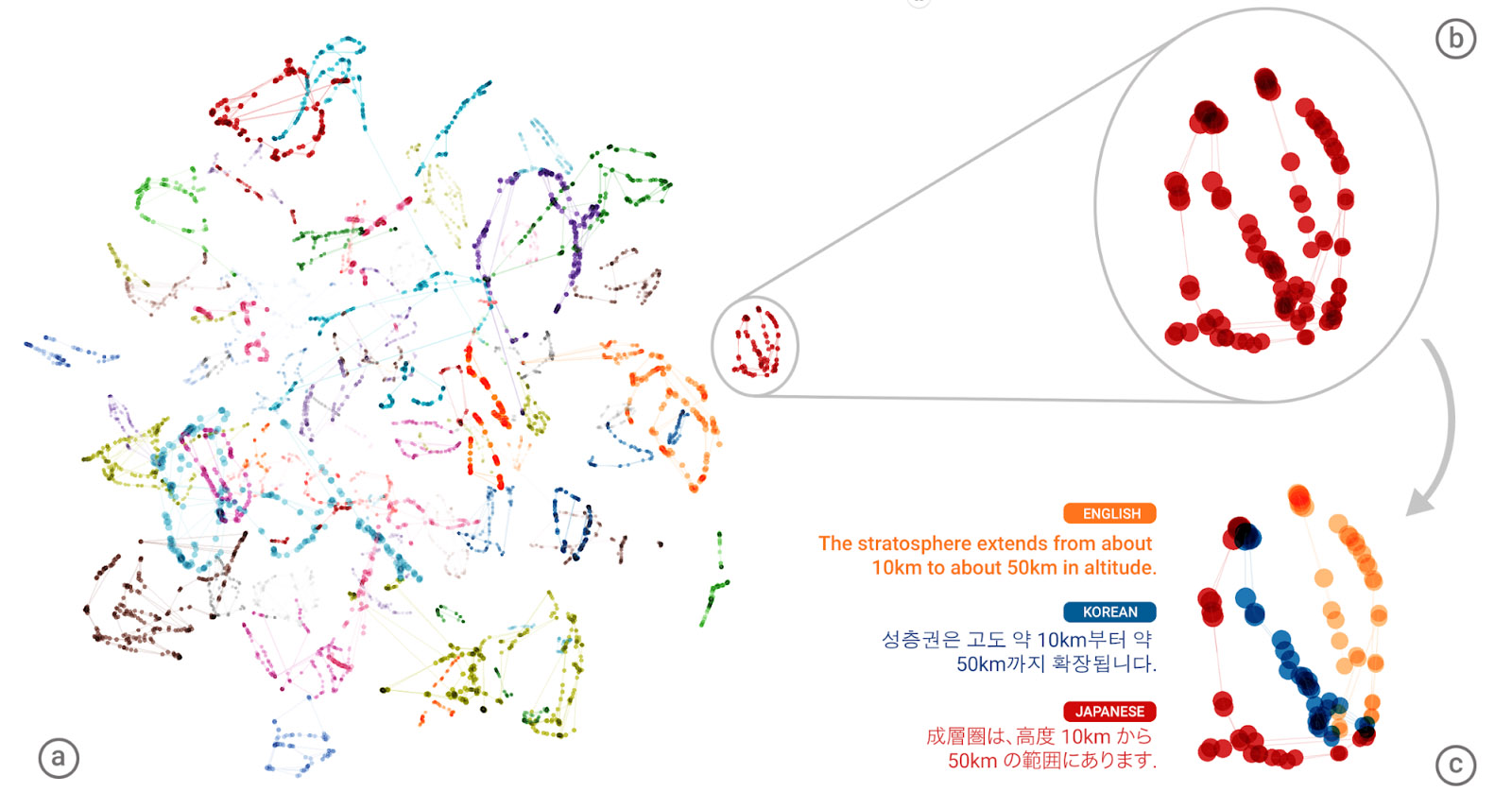

The researchers are not entirely sure how the AI manages to translate unseen language pairs. Deep learning networks are notoriously tough to understand. However, the researchers were able to peek inside a model trained on three languages by using a 3D representation of its internal data. When they zoomed in, they noticed that the system was automatically grouping sentences with similar meanings from different languages.

Thus, in essence, Google’s AI ended up developing its very own internal representation, or ‘interlingua’, for sentences and phrases that share the same meaning. The researchers write that the network must be encoding something about the semantics of the phrases or sentences instead of just memorising sentence-to-sentence or phrase-to-phrase translations. They interpret this as a sign that an ‘interlingua’ exists in the network.

For example, in one experiment, 12 different language pairs were merged into a single model of the same size as a single-pair model. In spite of the combined model being severely smaller than 12 separate ones, the researchers obtained translation quality only slightly lower than that of a dedicated two-language model.